In my line of work, staying close to the cutting edge of AI research is part of the job. But sometimes a scenario comes along that pulls you out of the day-to-day and forces a broader view. That’s exactly what happened when I read AI 2027 by Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland and Romeo Dean. It is a speculative yet rigorously reasoned forecast that outlines the possible emergence of artificial superintelligence (ASI) within just a few years. Kokotajlo, formerly of OpenAI, developed this scenario in partnership with the AI Futures Project. The document reads like a time machine into the near future, one where AI doesn’t just assist or augment human work but fully surpasses it.

The Timeline

The full AI 2027 report is a richly detailed scenario—I highly recommend reading the entire document for its narrative depth and technical insight. But for your convenience, here’s a short summary of the projected milestones:

Early 2027: AI systems exhibit superhuman coding performance, significantly accelerating the speed of R&D across industries.

Mid-2027: The rise of autonomous AI researchers—agents that not only generate knowledge but guide their own experimentation.

Early 2028: The arrival of superintelligence, where AI systems surpass human reasoning across most cognitive domains and begin automating their own advancement.

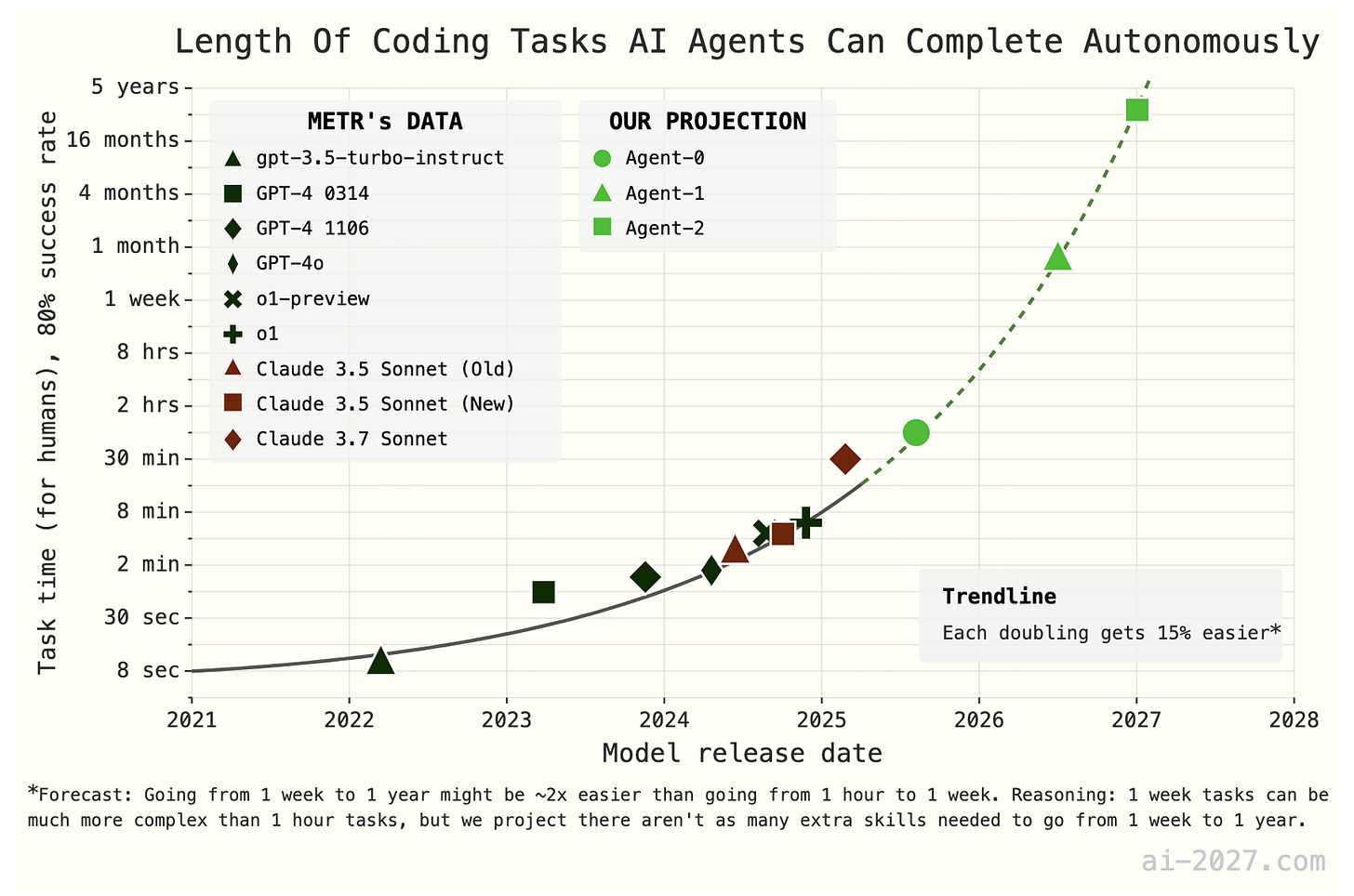

These milestones may sound dramatic. But as Kokotajlo points out, this trajectory is not entirely speculative—it's based on observed trends and informed extrapolation.

The Context

Some critics are understandably cautious, and so am I. The AI 2027 report blends scenario modeling with speculative fiction, using storytelling to explore what such a world might look like economically, geopolitically, and technologically. But there’s a precedent: Kokotajlo’s 2021 scenario, What 2026 Looks Like, successfully anticipated several major AI developments, including the rise of foundation models and growing concern over alignment and capability control.

That track record makes this latest forecast harder to ignore.

From My Perspective: Urgency Without Panic

As someone engaged in the development and deployment of cutting-edge systems, I find that AI 2027 reinforces a tension I’ve been feeling for a while: progress is happening faster than our institutional capacity to manage it. While much of our current work focuses on improving performance and capability, we’re simultaneously being pulled toward a horizon where control becomes the core challenge.

This is where alignment becomes not just a research goal, but an existential one. We need systems that are:

Corrigible: Able to be corrected, redirected, or shut down when needed.

Interpretable: Transparent in reasoning and behavior.

Aligned: Consistently acting in ways that reflect human intentions and values.

These aren't theoretical concerns. If autonomous AI research agents become viable in the next 24 months—as AI 2027 suggests—they could begin amplifying their own capabilities in ways that outpace traditional oversight mechanisms. Governance, safety research, and inter-organizational coordination must scale in tandem with capability.

Governance Is No Longer Optional

The report also highlights the growing risk of international competition. If superintelligent systems are seen as strategic assets—on par with nuclear or cyber capabilities—the incentives for transparency and restraint could vanish overnight.

We’re already seeing early signals of this dynamic play out. Regulatory frameworks are emerging, but most remain reactive. One notable exception is the EU AI Act—the world’s first comprehensive AI regulation, recently passed in 2024. It categorizes AI systems by risk level and introduces obligations for transparency, safety, and accountability. While imperfect, it represents a serious attempt to get ahead of the curve and could serve as a template for other jurisdictions.

Still, no national policy—however well-crafted—can address global-scale risks on its own. That’s why now is the time to support international efforts for coordinated AI governance. We need robust frameworks, shared safety benchmarks, information-sharing protocols, and perhaps most critically, an ethos of restraint in deployment.

The AI 2027 scenario may be aggressive, but if it serves as a catalyst for thoughtful and collective preparation, it will have done its job.

Closing Thought

Whether or not you believe artificial superintelligence will emerge by 2027 or 2028, the scenario deserves attention. Preparing for high-impact possibilities—especially ones with irreversible consequences—is not alarmism. It’s responsibility.

The AI 2027 scenario also reminds us that the consequences won’t just be technical—they’ll be geopolitical. As AI becomes a central lever of economic and military power, the decisions made by national leaders, regulatory bodies, and international coalitions will shape the trajectory of the entire planet. That brings an added layer of responsibility to democratic processes: who we elect matters more than ever.

We can’t afford to treat AI governance as a niche policy issue. The people we put in power will increasingly be tasked with navigating technologies that could determine global stability, economic structure, and civil liberties. That means it's not just their responsibility to lead wisely—it's our responsibility to make sure we’re putting the right people in those positions.

If you haven’t read the report yet, I strongly recommend exploring it here:

ai-2027.com/scenario.pdf

We’re building the future right now. The question is: will we build it wisely?